EventMapper

EventMapper is a framework for generic real-world physical event detection under adversarial conditions. It's no longer under development; we have replaced it with a combination of MLFlow and EdnaML. See our papers:

- A Framework for Identifying Physical Events through Adaptive Social Sensor Data Filtering. ACM DEBS

- Event Detection in Noisy Streaming Data with Combination of Corroborative and Probabilistic Sources. IEEE CIC

- Concept Drift Adaptive Physical Event Detection for Social Media Streams. SCF

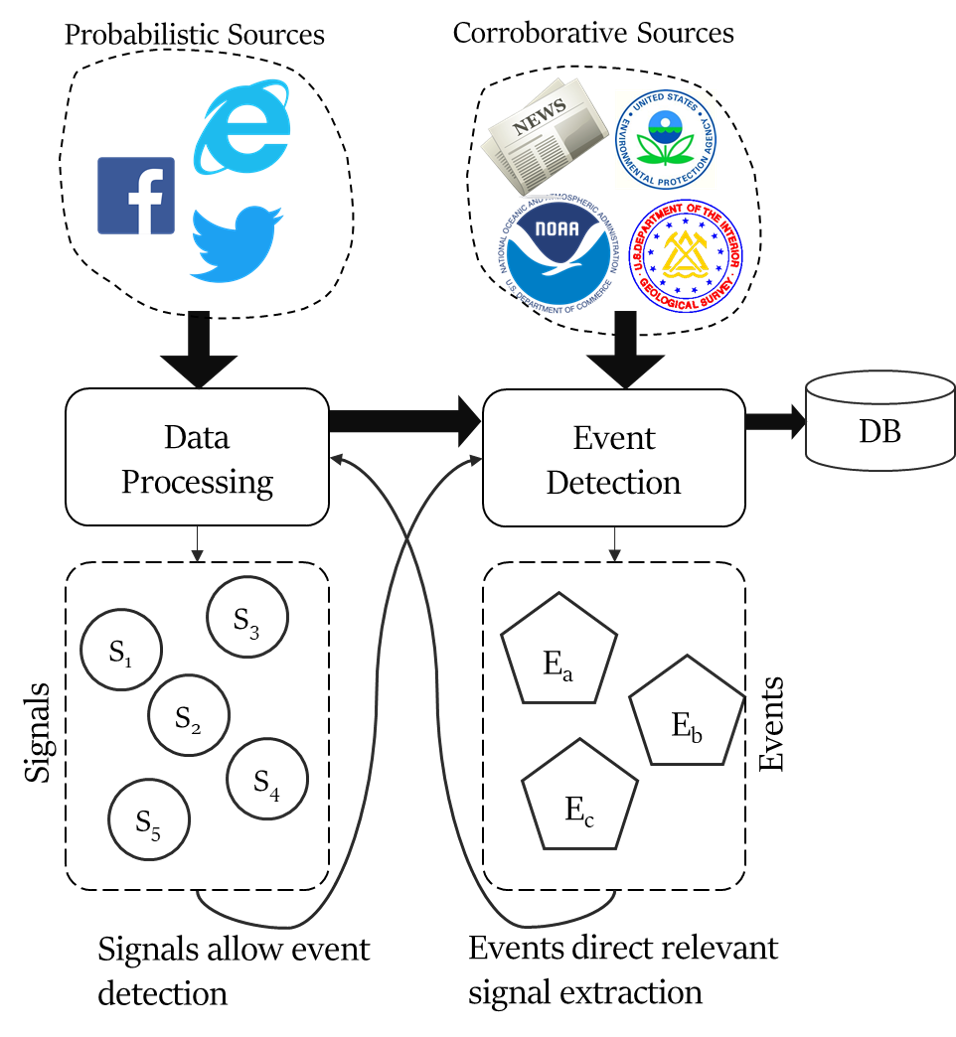

Event recognition is the classification and re-identification of relevant events over time. We can think of it as two codependent processes: (1) data processing and (2) event detection. Event detection requires processing raw real-world data to extract relevant signals, and data processing requires knowing which events to follow in the universe of events.

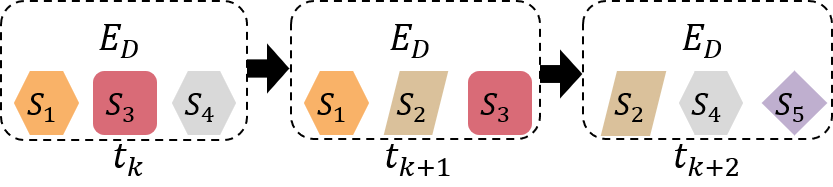

A common challenge in event recognition is concept drift, where the distribution of data changes continuously. Concept drift is well documented in event recognition. Under concept drift, static event detection methods (rule-based, machine learning, or deep learning) degrade in performance over time. So far, effective concept drift adaptation strategies for noisy social media data remain unexplored; most work in drift detection and adaptation focuses on small, closed datasets with well-known drift attributes.

We developed EventMapper to address this gap. EventMapper is a framework for event recognition that exploits the codependence of data processing and event detection to support long-term event recognition. As a result, EventMapper can adapt to concept drift. For demonstration purposes, we integrated two types of sources for social sensor event detection: high-confidence corroborative sources and low-confidence probabilistic sources.

Corroborative Sources. A high-confidence corroborative source is a dedicated physical or web-based sensor that provides physical event information annotated by human experts. Due to expert corroboration, corroborative sources have reduced noise and drift. However, corroboration increases their cost, so corroborative sources have reduced coverage and higher latency.

Probabilistic Sources. A probabilistic source is any source without corroboration, such as raw web streams from non-experts. Lack of corroboration makes streams such as Twitter and Facebook noisy and prone to drift (the exception is most verified official accounts on Twitter, e.g. journalists and government agencies). Fortunately, such sources are globally available and have low latency.

By integrating corroborative and probabilistic sources with codependence, EventMapper adapts to concept drift over time. Corroborative sources are used to fine-tune the data processing modules for social sensors. This allows the data processing modules to remain robust to drift when they use statistical and machine learning methods to extract relevant signals from probabilistic sources for event detection.

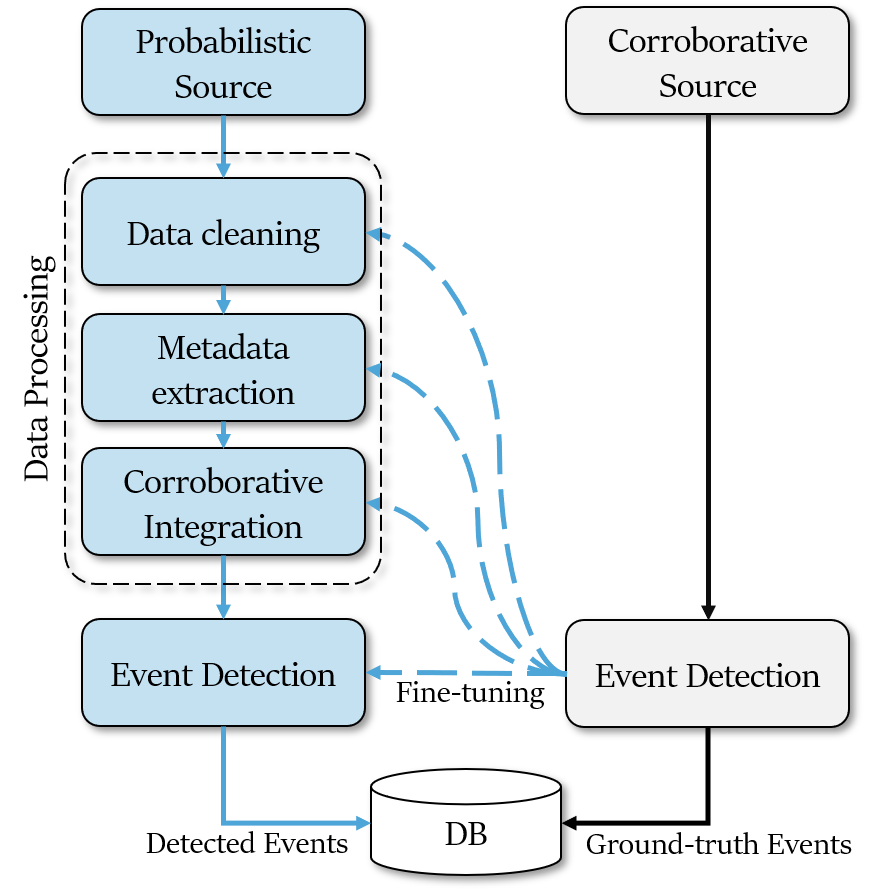

The EventMapper framework, shown above, integrates corroborative and probabilistic sources for dense, global, real-time physical event recognition. Ground-truth events detected from corroborative sources are used to fine-tune data processing (which includes data cleaning, metadata extraction, and ML models for classification) for probabilistic sources. Continuous fine-tuning requires concept drift adaptation, which means updating data processing modules with the current stream’s distribution. Current approaches perform this update manually and on closed datasets. EventMapper's advantage is that it automates the continuous fine-tuning, allowing scalable drift adaptation that remains functional long after initial model construction. This keeps the data processing steps robust to concept drift.
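As a rough illustration of what "updating with the current stream's distribution" can look like, the sketch below refits a toy word-count classifier on a sliding window of the most recently labeled posts. This is not EventMapper's actual model: the class name `WindowedKeywordClassifier`, the window size, and the scoring rule (a smoothed log-likelihood ratio over words, with no class priors) are all assumptions made for the example.

```python
from collections import Counter, deque
import math


class WindowedKeywordClassifier:
    """Toy stand-in for a drift-adaptive classifier (hypothetical, for illustration).

    It keeps only the most recent labeled posts and refits its word counts
    from that window each time new labels arrive.
    """

    def __init__(self, window_size=1000):
        # Oldest labeled posts are evicted automatically once the window is full.
        self.window = deque(maxlen=window_size)
        self.counts = {True: Counter(), False: Counter()}

    def update(self, labeled_posts):
        """labeled_posts: iterable of (text, is_relevant) pairs."""
        self.window.extend(labeled_posts)
        # Refit from scratch on the current window only.
        self.counts = {True: Counter(), False: Counter()}
        for text, is_relevant in self.window:
            self.counts[is_relevant].update(text.lower().split())

    def predict(self, text):
        """Return True if the post looks relevant under the current window."""
        vocab = len(set(self.counts[True]) | set(self.counts[False])) or 1
        n_rel = sum(self.counts[True].values())
        n_irr = sum(self.counts[False].values())
        score = 0.0
        for token in text.lower().split():
            # Add-one smoothing so unseen words contribute nothing.
            p_rel = (self.counts[True][token] + 1) / (n_rel + vocab)
            p_irr = (self.counts[False][token] + 1) / (n_irr + vocab)
            score += math.log(p_rel / p_irr)
        return score > 0
```

In a pipeline of this shape, `update` would be called with posts labeled through corroborative sources, and `predict` with the posts that could not be labeled that way.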

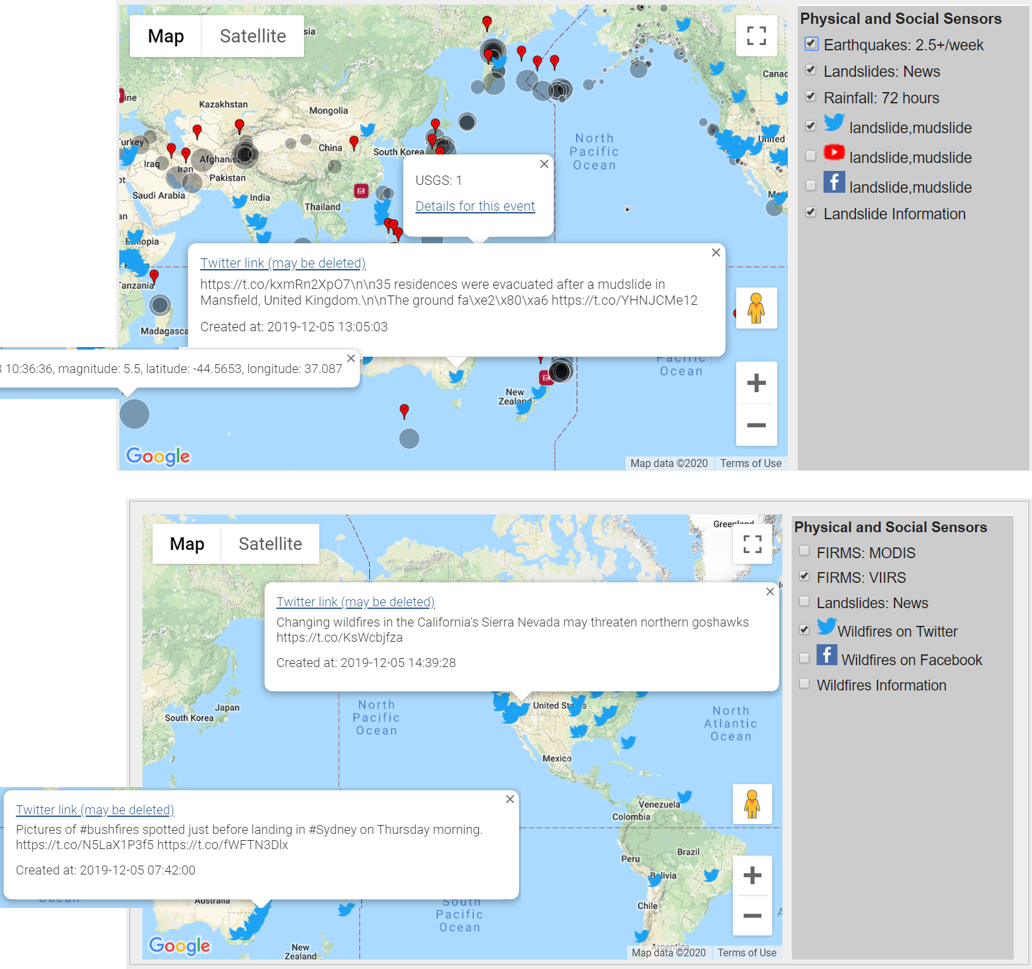

Application: Landslide detection

We demonstrated EventMapper for landslide detection. We selected landslides as the target event since they are a weak-signal disaster with significant noise in social media streams: the word landslide is polysemous, meaning it carries multiple meanings. In addition to the disaster, it can refer to election landslides and to the song Landslide (by Fleetwood Mac).

Corroborative Sources. We use four corroborative sources for landslide detection:

NASA TRMM provides landslide likelihood data in select locations around the globe. TRMM has three levels of predictions: 1-day, 3-day, and 7-day. Each prediction level provides the landslide likelihood from NASA’s landslide models, the closest location name, and the latitude/longitude. EventMapper uses the location names to update the substring match list used for NER fine-tuning. We use the 1-day landslide predictions.

USGS Earthquake provides detected earthquakes around the globe. For each instance, it provides magnitude and latitude/longitude of the epicenter.

NOAA GHCND provides daily rainfall data at NOAA weather stations around the globe. Each station provides its latitude and longitude, along with rainfall over the past day. Due to a combination of old equipment, budget cuts, and progressive expansion, many stations do not provide up-to-date information.

News articles about landslides provide late corroboration since they are delayed by 2-3 days. EventMapper follows articles with an off-the-shelf API (NewsAPI) using the landslide disaster tag. Locations are extracted with NER, since articles are long, structured text on which NER performs well.

We use NASA TRMM and News as landslide ground-truth data, where available. We combine USGS and GHCND data to provide secondary ground-truth data, since heavy rainfall and an earthquake in the same location indicate a high probability of a landslide.
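The USGS and GHCND combination described above can be sketched as a proximity join between earthquakes and rainy stations. The thresholds (`MIN_MAGNITUDE`, `RAIN_MM_THRESHOLD`, `MAX_DISTANCE_KM`), the record field names, and the function `secondary_ground_truth` are assumptions for illustration; the values EventMapper actually uses are not stated here.

```python
import math

# Assumed thresholds, chosen only for this example.
MIN_MAGNITUDE = 4.0
RAIN_MM_THRESHOLD = 50.0
MAX_DISTANCE_KM = 50.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two lat/lon points."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def secondary_ground_truth(quakes, stations):
    """Flag earthquakes that have a heavy-rainfall station nearby.

    quakes:   dicts with "lat", "lon", "magnitude" (hypothetical schema)
    stations: dicts with "lat", "lon", "rain_mm"   (hypothetical schema)
    """
    events = []
    for quake in quakes:
        if quake["magnitude"] < MIN_MAGNITUDE:
            continue
        for station in stations:
            if station["rain_mm"] < RAIN_MM_THRESHOLD:
                continue
            distance = haversine_km(quake["lat"], quake["lon"], station["lat"], station["lon"])
            if distance <= MAX_DISTANCE_KM:
                events.append({"lat": quake["lat"], "lon": quake["lon"], "source": "usgs+ghcnd"})
                break  # one rainy station nearby is enough
    return events
```

The nested loop is quadratic in the number of records; a spatial index or a grid would be the natural next step at global scale.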

In the corroborative integration process, we map ground-truth events and probabilistic source posts to a spatio-temporal grid. EventMapper labels probabilistic posts that share a time and geographic location with a ground-truth event as relevant posts. The labeled posts from corroborative integration are used to fine-tune ML classifiers in the Update Step of the Event Detection process. Each probabilistic post that could not be labeled with corroborative integration is processed with the Classification Step.
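A minimal sketch of the grid mapping described above, assuming a fixed cell size and a fixed time bucket. The constants `CELL_DEG` and `WINDOW_HOURS`, the dictionary fields, and the function names are hypothetical and are not taken from the EventMapper code.

```python
import math
from datetime import datetime, timezone

CELL_DEG = 0.5      # assumed grid cell size in degrees
WINDOW_HOURS = 24   # assumed width of one time bucket


def grid_key(lat, lon, ts):
    """Map a point in space and time to a (row, col, time bucket) cell."""
    row = math.floor((lat + 90.0) / CELL_DEG)
    col = math.floor((lon + 180.0) / CELL_DEG)
    bucket = int(ts.timestamp() // (WINDOW_HOURS * 3600))
    return (row, col, bucket)


def split_posts(posts, ground_truth_events):
    """Label posts that share a cell with a ground-truth event.

    Returns (labeled, unlabeled): labeled posts would feed the Update Step,
    unlabeled posts would go on to the Classification Step.
    """
    event_cells = {grid_key(e["lat"], e["lon"], e["time"]) for e in ground_truth_events}
    labeled, unlabeled = [], []
    for post in posts:
        if grid_key(post["lat"], post["lon"], post["time"]) in event_cells:
            labeled.append({**post, "label": "relevant"})
        else:
            unlabeled.append(post)
    return labeled, unlabeled


if __name__ == "__main__":
    events = [{"lat": 27.7, "lon": 85.3,
               "time": datetime(2020, 7, 1, 6, 0, tzinfo=timezone.utc)}]
    posts = [
        {"text": "road washed out by landslide", "lat": 27.9, "lon": 85.4,
         "time": datetime(2020, 7, 1, 20, 0, tzinfo=timezone.utc)},
        {"text": "landslide election win", "lat": 40.0, "lon": -75.0,
         "time": datetime(2020, 7, 1, 20, 0, tzinfo=timezone.utc)},
    ]
    labeled, unlabeled = split_posts(posts, events)
    print(len(labeled), len(unlabeled))
```

Exact cell matching misses posts that sit just across a cell or bucket boundary from an event; checking neighboring cells as well is one way to soften that, at the cost of noisier labels.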

A screenshot of the final UI is shown below.

Since EventMapper continuously fine-tunes all stages of event recognition, from data cleaning (dynamic stopwords) and metadata extraction (dynamic NER models) to classifiers (drift-adaptive models), it can identify weak-signal events from only 1-3 posts, in addition to stronger-signal instances of weak-signal events (3% of events have more than 15 posts per event). Integrating corroborative and probabilistic sources allows EventMapper to take advantage of both source types: with ground-truth events from corroborative sources, EventMapper can continuously fine-tune all stages of an event detection pipeline; simultaneously, EventMapper ensures global coverage with probabilistic sources.